Data science projects often involve complex workflows requiring multiple steps, including data extraction, transformation, model training, and deployment. Manually managing these workflows can be inefficient and error-prone. Apache Airflow is a powerful workflow automation tool that allows data scientists to schedule, monitor, and manage workflows effectively. For those interested in mastering such automation techniques, a data scientist course in Hyderabad provides a strong foundation in workflow orchestration tools like Airflow.

What is Apache Airflow?

Apache Airflow is an open-source platform for orchestrating complex computational workflows. It provides a framework for defining, scheduling, and monitoring data pipelines as Directed Acyclic Graphs (DAGs). Each DAG is a set of tasks connected by dependencies. A task runs only after the tasks upstream of it have finished, and the graph may not contain cycles. Tasks that do not depend on each other can therefore run in parallel. With Airflow, data scientists can automate repetitive tasks, ensuring efficiency and consistency. Enrolling in a Data Science Course equips professionals with hands-on experience using Airflow to build scalable and reliable data workflows.
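The dependency idea behind a DAG can be sketched in plain Python without Airflow installed. This is a minimal illustration, not Airflow's API. The task names are hypothetical, and the standard library's `graphlib.TopologicalSorter` (Python 3.9+) stands in for Airflow's scheduler when it works out a valid run order. In a real Airflow DAG file, the same edges would be declared with the bitshift operator, for example `extract >> clean`.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of upstream tasks
# that must finish before it can start.
PIPELINE: dict[str, set[str]] = {
    "extract": set(),
    "clean": {"extract"},
    "features": {"clean"},
    "train": {"features"},
    "evaluate": {"train"},
    "report": {"clean"},  # needs clean data only, not the trained model
}


def execution_order(dag: dict[str, set[str]]) -> list[str]:
    """Return one valid run order; raises graphlib.CycleError if the graph has a cycle."""
    return list(TopologicalSorter(dag).static_order())


if __name__ == "__main__":
    print(execution_order(PIPELINE))
```

The printed list is one valid order among several. Because `report` depends only on `clean`, it does not have to wait for model training. A graph with a cycle raises `graphlib.CycleError`, which is why the graph must be acyclic.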

Why Automate Data Science Workflows?

Automation in data science minimises human intervention, reduces errors, and enhances reproducibility. Manual execution of data pipelines can lead to inconsistencies and inefficiencies, particularly when handling large datasets or frequently refreshed analytics. Airflow orchestrates the end-to-end workflow. It triggers each step, from ingestion to model deployment, in the correct order and on schedule. Learning Airflow through a Data Science Course enables data professionals to manage complex workflows effectively and optimise machine learning pipelines.

Key Features of Apache Airflow

- Scalability – Airflow supports distributed execution through executors such as the CeleryExecutor and KubernetesExecutor. These spread tasks across multiple workers, which suits large-scale data science workflows.

- Extensibility – It offers a plugin system and provider packages with ready-made operators and hooks for cloud platforms, databases, and external APIs.

- Monitoring and Logging – Airflow’s user interface offers real-time tracking of DAGs and task execution status.

- Retry Mechanism – Failed tasks can be retried automatically a configurable number of times, with a delay between attempts. This improves resilience to transient failures.

- Parameterised Workflows – Runtime parameters (params) and Jinja templating, such as the run's logical date, let the same DAG run with different inputs. This makes workflows flexible and reusable.

Understanding these features through a data scientist course in Hyderabad enhances one’s ability to implement robust automation strategies in data science.
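The retry mechanism can be illustrated with a small plain-Python sketch. It only loosely mirrors the idea behind Airflow's `retries`, `retry_delay` and `retry_exponential_backoff` operator arguments; it is not Airflow's implementation, and Airflow's own backoff calculation differs in detail. The `sleep` parameter is a hypothetical hook added so the delays can be inspected instead of waited on.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    task: Callable[[], T],
    retries: int = 3,
    retry_delay: float = 1.0,
    exponential_backoff: bool = True,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `task`, retrying up to `retries` extra times if it raises."""
    attempt = 0
    while True:
        try:
            return task()
        except Exception:
            if attempt >= retries:
                raise  # retries exhausted: the task counts as failed
            # Double the wait after each failure when backoff is enabled.
            delay = retry_delay * (2 ** attempt) if exponential_backoff else retry_delay
            sleep(delay)
            attempt += 1
```

A task that fails twice and then succeeds returns normally after waits of 1 and 2 seconds (with the defaults). A task that always fails is called `retries + 1` times before its last exception propagates.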

Setting Up Apache Airflow for Data Science

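Setting up Apache Airflow involves the following steps:

- Installing it, typically with pip and the project's published constraints file.
- Initialising a metadata database. SQLite is suitable only for local experiments; PostgreSQL or MySQL is recommended in production.
- Choosing an executor.
- Adjusting settings through airflow.cfg or environment variables.

The scheduler and the web server then run as separate processes. Once Airflow is running, users define workflows as DAGs in Python files placed in the DAGs folder. A data science course provides step-by-step guidance on setting up and optimising Airflow for data science projects.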
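Airflow reads any option from airflow.cfg through an environment variable named `AIRFLOW__{SECTION}__{KEY}`, with double underscores. The helper below is a small sketch that builds those names. The settings are illustrative assumptions, and the connection string uses a hypothetical user, password and database. The `[database]` section for `sql_alchemy_conn` assumes Airflow 2.3 or later; older releases kept it under `[core]`.

```python
def airflow_env_var(section: str, key: str) -> str:
    """Return the environment variable that overrides `[section] key` in airflow.cfg."""
    # Airflow's convention: AIRFLOW__{SECTION}__{KEY}, with double underscores.
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


def build_airflow_env(settings: dict[tuple[str, str], str]) -> dict[str, str]:
    """Convert {(section, key): value} pairs into environment-variable assignments."""
    return {airflow_env_var(section, key): value for (section, key), value in settings.items()}


# Example settings for a single-machine setup. The connection string is a
# placeholder (hypothetical user, password and database name).
EXAMPLE_SETTINGS = {
    ("core", "executor"): "LocalExecutor",
    ("core", "load_examples"): "False",
    ("database", "sql_alchemy_conn"): "postgresql+psycopg2://airflow_user:change_me@localhost:5432/airflow_db",
}

if __name__ == "__main__":
    # Print shell-style export lines, e.g. for a .env file or a container spec.
    for name, value in build_airflow_env(EXAMPLE_SETTINGS).items():
        print(f"export {name}={value}")
```

Keeping configuration in environment variables rather than editing airflow.cfg by hand makes it easier to reproduce the same setup across laptops, CI and containers.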

Creating a Data Science Workflow with Airflow

A typical data science workflow consists of multiple stages:

- Data Extraction: Pulling data from databases, APIs, or cloud storage.

- Data Cleaning and Transformation: Using libraries like Pandas or Spark to preprocess data.

- Feature Engineering: Creating meaningful features for machine learning models.

- Model Training and Validation: Running machine learning algorithms and evaluating performance.

- Deployment and Monitoring: Deploying the trained model into production and monitoring its performance.

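Airflow automates these stages by turning each one into a task and declaring the dependencies between them within a DAG. For example, model training starts only after feature engineering has succeeded. Mastering these automation techniques through a data science course prepares professionals to build scalable machine learning workflows.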
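The stages above can be sketched as plain Python functions chained in dependency order. This is a toy stand-in, not Airflow code. Each function plays the role of one hypothetical task, the data is made up, and results are passed through a dict that loosely imitates how Airflow tasks share small values via XCom. In practice, tasks usually pass file paths or table names rather than large datasets.

```python
from statistics import mean


def extract() -> list[dict]:
    # Stand-in for reading from a database, API or cloud storage.
    return [
        {"hours": 2.0, "score": 55.0},
        {"hours": 4.0, "score": 65.0},
        {"hours": None, "score": 70.0},  # incomplete record
        {"hours": 6.0, "score": 75.0},
    ]


def clean(rows: list[dict]) -> list[dict]:
    # Drop records with missing values.
    return [r for r in rows if r["hours"] is not None and r["score"] is not None]


def engineer_features(rows: list[dict]) -> list[tuple[float, float]]:
    return [(r["hours"], r["score"]) for r in rows]


def train(features: list[tuple[float, float]]) -> dict:
    # Ordinary least squares for a single feature: score = intercept + slope * hours.
    xs = [x for x, _ in features]
    ys = [y for _, y in features]
    x_bar, y_bar = mean(xs), mean(ys)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in features) / sum((x - x_bar) ** 2 for x in xs)
    return {"slope": slope, "intercept": y_bar - slope * x_bar}


def validate(model: dict, features: list[tuple[float, float]]) -> float:
    # Mean absolute error; a real pipeline would score held-out data instead.
    return mean(abs(model["intercept"] + model["slope"] * x - y) for x, y in features)


def deploy(model: dict, mae: float, max_mae: float = 5.0) -> dict:
    # Raising here would fail the task and block deployment of a weak model.
    if mae > max_mae:
        raise ValueError(f"MAE {mae:.2f} exceeds {max_mae}; refusing to deploy")
    return {"status": "deployed", **model}


def run_pipeline() -> dict:
    results = {}
    results["extract"] = extract()
    results["clean"] = clean(results["extract"])
    results["features"] = engineer_features(results["clean"])
    results["train"] = train(results["features"])
    results["validate"] = validate(results["train"], results["features"])
    results["deploy"] = deploy(results["train"], results["validate"])
    return results


if __name__ == "__main__":
    print(run_pipeline()["deploy"])
```

Because each stage is a separate function, each one could map to a separate Airflow task, so a failure in, say, validation stops deployment without rerunning extraction.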

Benefits of Using Airflow in Data Science

- Improved Workflow Management: Airflow helps organise tasks efficiently, ensuring that dependencies are correctly managed.

- Automated Error Handling: Failed tasks can be retried automatically, reducing downtime.

- Scalability for Big Data: Supports parallel execution, making it suitable for large-scale data processing.

- Integration with Cloud and On-Premise Solutions: Provider packages supply operators and hooks for cloud services such as AWS, Google Cloud, and Azure, as well as for on-premise databases and Spark or Hadoop clusters.

- Enhanced Collaboration: Multiple teams can collaborate on workflow automation, ensuring consistency across data pipelines.

Enrolling in a data science course helps professionals understand these benefits and implement Airflow efficiently in real-world scenarios.
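The parallel-execution benefit can be sketched with the standard library. This is an assumption-laden stand-in, not how Airflow runs tasks. A thread pool plays the role of the executor, and `graphlib.TopologicalSorter` releases each task only when its upstream tasks are done. In Airflow itself, how many tasks actually run at once depends on the chosen executor and settings such as `parallelism`. The task names in the demo are hypothetical.

```python
import time
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter
from typing import Callable


def run_parallel(
    dag: dict[str, set[str]],
    tasks: dict[str, Callable[[], object]],
    max_workers: int = 4,
) -> list[str]:
    """Start each task as soon as all of its upstream tasks have finished.

    Returns task names in the order they completed. A failing task's
    exception is re-raised and no further tasks are started.
    """
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # also raises CycleError for cyclic graphs
    completed: list[str] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        running = {}  # future -> task name
        while sorter.is_active():
            # Submit every task whose dependencies are now satisfied.
            for name in sorter.get_ready():
                running[pool.submit(tasks[name])] = name
            finished, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in finished:
                name = running.pop(future)
                future.result()  # propagate the task's exception, if any
                sorter.done(name)
                completed.append(name)
    return completed


if __name__ == "__main__":
    # Hypothetical DAG: two independent cleaning tasks fan out from one extract.
    demo_dag = {
        "extract": set(),
        "clean_sales": {"extract"},
        "clean_web": {"extract"},
        "merge": {"clean_sales", "clean_web"},
    }
    demo_tasks = {name: (lambda: time.sleep(0.1)) for name in demo_dag}
    start = time.perf_counter()
    print(run_parallel(demo_dag, demo_tasks))
    print(f"elapsed: {time.perf_counter() - start:.2f}s")
```

The two cleaning tasks overlap, so the total runtime is close to three task durations rather than four. That overlap is the gain a real executor provides at a much larger scale.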

Best Practices for Using Airflow in Data Science

- Design Modular DAGs: Keep workflows modular to facilitate debugging and maintenance.

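- Use Task Dependencies Wisely: Declare every real dependency between tasks, and no unnecessary ones. Each task then runs only when its inputs are ready, while independent tasks can still run in parallel.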

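- Leverage Airflow’s Scheduling Capabilities: Use cron expressions, presets such as @daily, or fixed time intervals to trigger workflows automatically. Set catchup deliberately to control whether missed past intervals are backfilled.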

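- Optimise DAG Performance: Keep top-level code in DAG files lightweight, because the scheduler parses these files repeatedly. Run independent tasks in parallel, and use pools to limit the load on shared resources such as databases.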

- Monitor and Log Workflows: Use Airflow’s UI and logging features for real-time monitoring and troubleshooting.

Professionals who complete a data science course gain hands-on experience implementing these best practices, ensuring efficient workflow automation.
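Scheduling is easier to reason about once you know which time windows are "due". The sketch below models an Airflow convention in plain Python: a run that covers the data interval [start, end) is triggered only after end has passed. With `catchup=True`, Airflow creates a run for each missed interval; with `catchup=False`, it schedules only the latest one. The function name and dates are illustrative, and the code does not reproduce Airflow's cron handling.

```python
from datetime import datetime, timedelta


def due_intervals(start: datetime, now: datetime, every: timedelta) -> list[tuple[datetime, datetime]]:
    """Return every complete [interval_start, interval_end) window between start and now."""
    intervals = []
    interval_start = start
    # A window is due only once its end has passed, so the daily run that
    # covers January 1 becomes due at the start of January 2.
    while interval_start + every <= now:
        intervals.append((interval_start, interval_start + every))
        interval_start += every
    return intervals


if __name__ == "__main__":
    windows = due_intervals(datetime(2024, 1, 1), datetime(2024, 1, 4, 9, 30), timedelta(days=1))
    for begin, end in windows:
        print(f"{begin:%Y-%m-%d} -> {end:%Y-%m-%d}")
    # catchup=True would mean one run per window above; catchup=False, only the last.
```

This also explains a common surprise: a daily DAG with a start date of today does not run until tomorrow, because its first interval has not finished yet.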

Real-World Use Cases of Airflow in Data Science

- ETL Pipelines: Automating data extraction, transformation, and loading for business intelligence applications.

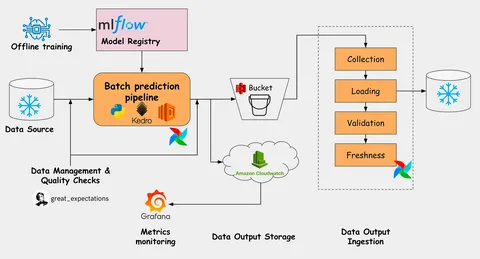

- Machine Learning Pipelines: Managing end-to-end ML workflows from data preprocessing to model deployment.

- Data Quality Monitoring: Automating data validation and anomaly detection tasks.

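- Near-real-time Analytics: Scheduling frequent batch jobs that process newly arrived data for timely insights. Airflow orchestrates batch work; continuous stream processing is usually handled by dedicated streaming tools.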

- Marketing and Customer Analytics: Automating customer segmentation, recommendation systems, and personalisation models.

Understanding these use cases through a data science course prepares professionals to implement Airflow effectively in various domains.
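For the data quality monitoring use case, a validation step can be as simple as a function that collects problems and raises if any are found. In Airflow, a Python task fails when its callable raises an exception, so a check like this can halt downstream tasks. The column names, the age range and the function names are hypothetical examples, not part of any Airflow API.

```python
def check_quality(rows: list[dict], required: list[str], ranges: dict[str, tuple[float, float]]) -> list[str]:
    """Return human-readable problems: missing required values or out-of-range numbers."""
    problems = []
    for i, row in enumerate(rows):
        for col in required:
            if row.get(col) is None:
                problems.append(f"row {i}: missing {col}")
        for col, (low, high) in ranges.items():
            value = row.get(col)
            if value is not None and not (low <= value <= high):
                problems.append(f"row {i}: {col}={value} outside [{low}, {high}]")
    return problems


def quality_gate(rows: list[dict], required: list[str], ranges: dict[str, tuple[float, float]]) -> int:
    """Raise if any check fails (which would fail an Airflow task); else return the row count."""
    problems = check_quality(rows, required, ranges)
    if problems:
        raise ValueError("data quality check failed: " + "; ".join(problems))
    return len(rows)


if __name__ == "__main__":
    sample = [
        {"customer_id": 1, "age": 34},
        {"customer_id": None, "age": 29},
        {"customer_id": 3, "age": 212},
    ]
    print(check_quality(sample, required=["customer_id", "age"], ranges={"age": (0, 120)}))
```

Returning the full list of problems, rather than stopping at the first one, makes the failure log more useful when the task is investigated in Airflow's UI.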

Conclusion

Automating data science workflows with Apache Airflow enhances efficiency, reproducibility, and scalability. As data workflows become increasingly complex, tools like Airflow help streamline processes and optimise machine learning pipelines. Whether managing ETL processes, model training, or near-real-time analytics, Airflow simplifies orchestration and monitoring. To gain expertise in workflow automation, a data science course provides the necessary skills to leverage Airflow effectively in real-world projects.

ExcelR – Data Science, Data Analytics and Business Analyst Course Training in Hyderabad

Address: Cyber Towers, PHASE-2, 5th Floor, Quadrant-2, HITEC City, Hyderabad, Telangana 500081

Phone: 096321 56744